The host model and its stolen copies output the same prediction on the trigger set

Abstract

Deep neural network (DNN) watermarking is an emerging technique for protecting the intellectual property of deep learning models. Many DNN watermarking algorithms have been proposed to achieve provenance verification by embedding identity information into the internals or prediction behaviors of the host model. However, most of these methods are vulnerable to model extraction attacks, in which attackers collect output labels from the model to train a surrogate or replica. To address this issue, we present a novel DNN watermarking approach, named SSW, which progressively constructs an adaptive trigger set by optimizing over a pair of symmetric shadow models to enhance robustness to model extraction. Specifically, we train a positive shadow model, supervised by the predictions of the host model, to mimic the behavior of potential surrogate models. In addition, a negative shadow model is trained normally to imitate irrelevant independent models. Using this pair of shadow models as a reference, we design a strategy that updates the trigger samples so that they tend to persist in the host model and its stolen copies. Moreover, our method supports two embedding schemes: embedding the watermark via fine-tuning or from scratch. Extensive experimental results on popular datasets demonstrate that SSW outperforms state-of-the-art methods against various model extraction attacks, under both trigger-set-classification-accuracy-based and hypothesis-test-based verification. The results also show that our method is robust to common model modification schemes, including fine-tuning and model compression.

Method

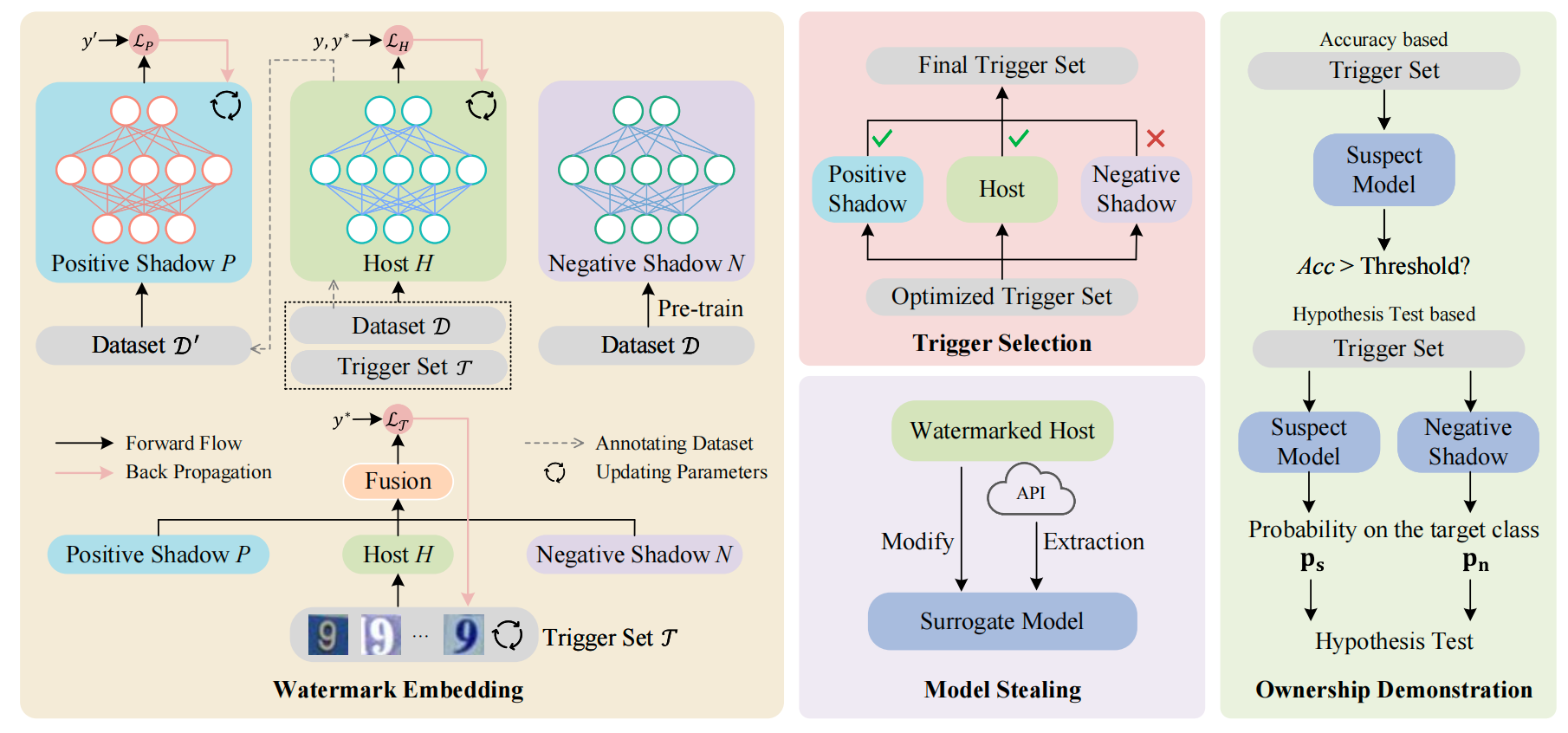

The overall pipeline of SSW

The SSW algorithm involves three stages: watermark embedding, trigger selection, and ownership demonstration. In the watermark embedding stage, the host model

The trigger selection stage then selects the samples that are best suited to ownership verification. The ownership demonstration stage can rely on either the classification accuracy on the trigger set or a hypothesis test, depending on the actual scenario.
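The two verification options mentioned above can be illustrated with a minimal sketch. It is not the paper's implementation: the function names, the accuracy threshold, the significance level, and the null hypothesis (an independent model matches each trigger label with probability `1 / num_classes`) are all assumptions made here for illustration, and the test used in the paper may differ.

```python
from math import comb


def count_matches(predictions, trigger_labels):
    """Count trigger samples on which the suspect model outputs the watermark label."""
    if len(predictions) != len(trigger_labels) or not trigger_labels:
        raise ValueError("predictions and trigger_labels must be non-empty and equal in length")
    return sum(p == t for p, t in zip(predictions, trigger_labels))


def binomial_p_value(matches, n, chance):
    """One-sided tail probability P(X >= matches) for X ~ Binomial(n, chance)."""
    return sum(
        comb(n, k) * chance**k * (1 - chance) ** (n - k)
        for k in range(matches, n + 1)
    )


def verify_ownership(predictions, trigger_labels, num_classes,
                     acc_threshold=0.5, alpha=0.01):
    """Report both verification criteria for a suspect model.

    acc_threshold and alpha are hypothetical values, not taken from the paper.
    """
    n = len(trigger_labels)
    matches = count_matches(predictions, trigger_labels)
    accuracy = matches / n
    # Assumed null hypothesis: an unrelated model hits each trigger label by chance.
    p_value = binomial_p_value(matches, n, 1.0 / num_classes)
    return {
        "accuracy": accuracy,
        "p_value": p_value,
        "claim_by_accuracy": accuracy >= acc_threshold,
        "claim_by_test": p_value < alpha,
    }


if __name__ == "__main__":
    # Toy data: labels predicted by a suspect model on ten trigger samples.
    trigger_labels = [3, 1, 4, 1, 5, 9, 2, 6, 5, 3]
    suspect_preds = [3, 1, 4, 1, 5, 9, 2, 6, 0, 0]
    print(verify_ownership(suspect_preds, trigger_labels, num_classes=10))
```

The accuracy criterion suits settings where a fixed threshold is agreed in advance, while the tail probability gives a statistical statement that scales with the size of the trigger set; both need only the suspect model's output labels.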


BibTeX

@inproceedings{tan2023deep,
  title={Deep Neural Network Watermarking against Model Extraction Attack},
  author={Tan, Jingxuan and Zhong, Nan and Qian, Zhenxing and Zhang, Xinpeng and Li, Sheng},
  booktitle={Proceedings of the 31st ACM International Conference on Multimedia},
  year={2023}
}